Testing Kalman filter

To start with, let's use an empty array of sensors. This means our robot moves blindly, so its error should grow without bound.
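With no sensors, the filter only ever runs its prediction step, and prediction can only add uncertainty. Here is a minimal sketch of that effect. It assumes a 1D constant-velocity model with white-noise acceleration. `predict_cov`, `dt`, `q` and the initial covariance are illustrative choices, not the values used in this project.

```python
def predict_cov(P, dt, q):
    """Kalman predict step for the covariance of a 1D constant-velocity model.

    State is [position, velocity]; F = [[1, dt], [0, 1]] and the process noise
    Q comes from white-noise acceleration with spectral density q (assumed).
    """
    (p00, p01), (p10, p11) = P
    # F P F^T, expanded by hand for the 2x2 case
    a00 = p00 + dt * (p01 + p10) + dt * dt * p11
    a01 = p01 + dt * p11
    a10 = p10 + dt * p11
    a11 = p11
    # add Q = q * [[dt^3/3, dt^2/2], [dt^2/2, dt]]
    return [[a00 + q * dt**3 / 3, a01 + q * dt**2 / 2],
            [a10 + q * dt**2 / 2, a11 + q * dt]]


P = [[1.0, 0.0], [0.0, 0.1]]   # initial covariance (assumed)
position_std = []
for _ in range(50):            # 50 steps without a single measurement
    P = predict_cov(P, dt=0.1, q=0.5)
    position_std.append(P[0][0] ** 0.5)
print(f"after 1 step: {position_std[0]:.2f} m, after 50 steps: {position_std[-1]:.2f} m")
```

Nothing in this loop ever shrinks `P`, so the position uncertainty grows at every step.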

Let's add a GPS sensor.

Now the robot "knows" where it is, and the error is around 10 m. This is expected, because that is the GPS error we specified.

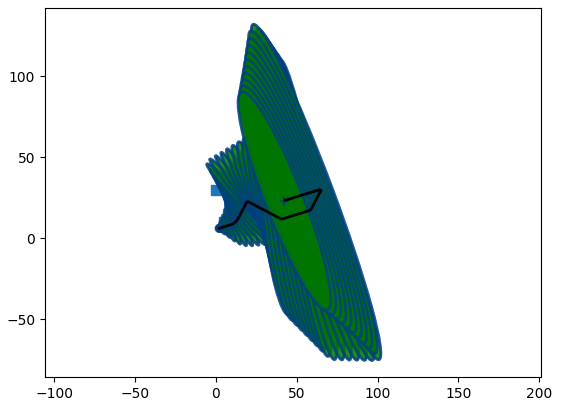
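A GPS fix measures position directly, so its update is the simplest possible Kalman update. Here is a minimal 1D sketch. It assumes a GPS noise of 10 m, a hypothetical process noise `Q` and a fixed step length. `gps_update` is an illustrative helper, not code from this project.

```python
import random


def gps_update(x, P, z, R):
    """Scalar Kalman update with a direct position measurement z of variance R."""
    K = P / (P + R)          # Kalman gain: how much we trust the fix
    x = x + K * (z - x)      # pull the estimate towards the measurement
    P = (1.0 - K) * P        # posterior variance is below both P and R
    return x, P


random.seed(1)
GPS_SIGMA = 10.0              # metres, assumed GPS noise
Q = 25.0                      # m^2 per step, assumed process noise
STEP = 0.5                    # metres travelled per step, assumed
true_x, x, P = 0.0, 0.0, 1e6  # start with "we know nothing"
for _ in range(200):
    true_x += STEP            # the robot moves
    x += STEP                 # the prediction uses the known motion...
    P += Q                    # ...and becomes less certain
    z = true_x + random.gauss(0.0, GPS_SIGMA)
    x, P = gps_update(x, P, z, GPS_SIGMA ** 2)
print(f"estimated position std: {P ** 0.5:.2f} m")
```

The posterior variance can never exceed `R`, so with GPS alone the error settles at or below the configured GPS noise. How far below depends on the process noise: the larger `Q` is, the closer the result gets to `R`.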

Let's turn off the GPS and use the IMU instead. Note that the IMU is an internal sensor, so its error accumulates over time.

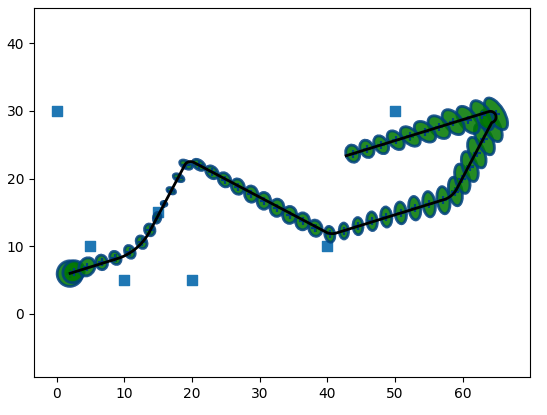
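The IMU error accumulates because its acceleration is integrated twice to get position, so every bit of noise stays in the estimate for good. Here is a minimal Monte Carlo sketch. It assumes a robot that actually stands still and an accelerometer with purely white noise (no bias). `dead_reckon_errors` and all the numbers are illustrative.

```python
import math
import random


def dead_reckon_errors(steps, dt, accel_sigma, rng):
    """Double-integrate a noisy accelerometer for a robot that stands still.

    Returns the position error after every step (the true position is 0).
    """
    v = x = 0.0
    errors = []
    for _ in range(steps):
        a_measured = rng.gauss(0.0, accel_sigma)  # true acceleration is 0
        v += a_measured * dt                      # first integration: speed
        x += v * dt                               # second integration: position
        errors.append(x)
    return errors


rng = random.Random(42)
RUNS, STEPS = 500, 100
sum_sq = [0.0] * STEPS
for _ in range(RUNS):
    for i, e in enumerate(dead_reckon_errors(STEPS, 0.1, 0.05, rng)):
        sum_sq[i] += e * e
rms = [math.sqrt(s / RUNS) for s in sum_sq]
print(f"RMS error after 10 steps: {rms[9]:.4f} m, after 100 steps: {rms[-1]:.4f} m")
```

For white noise, the position error grows roughly as t^(3/2). A real IMU also has bias, which makes the growth faster still.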

Let's use landmarks alone. Note that, compared to "the book", I have specified much larger sensor errors. In addition, approximately half of the landmarks are marked as hidden in the code. Nevertheless, the result is quite good. Also note that as the robot moves away from the cluster of landmarks, the uncertainty increases, and as it moves back, it decreases again.
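Here is a minimal sketch of one landmark update. It assumes a range-bearing sensor, a known landmark position and a known heading, so only x and y are estimated. `landmark_update`, `inv2` and the noise values are illustrative, not this project's code. A hidden landmark simply produces no observation, so the update is skipped for it. The sketch also shows why uncertainty grows away from the landmarks. The bearing rows of the Jacobian scale as 1/r, so a distant landmark constrains the position perpendicular to the line of sight much less.

```python
import math


def inv2(m):
    """Inverse of a 2x2 matrix given as nested lists."""
    (a, b), (c, d) = m
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def landmark_update(pos, P, landmark, z, sigma_r, sigma_b, heading=0.0):
    """EKF update of a 2D position from one range-bearing observation z.

    Written in information form: P_new^-1 = P^-1 + H^T R^-1 H,
    x_new = x + P_new H^T R^-1 (z - h(x)). The heading is assumed known.
    """
    dx, dy = landmark[0] - pos[0], landmark[1] - pos[1]
    r2 = dx * dx + dy * dy
    r = math.sqrt(r2)
    # Jacobian of [range, bearing] with respect to the robot's x, y
    H = [[-dx / r, -dy / r], [dy / r2, -dx / r2]]
    w = [1.0 / sigma_r ** 2, 1.0 / sigma_b ** 2]   # diagonal R^-1
    info = inv2(P)
    for i in range(2):
        for j in range(2):
            info[i][j] += sum(H[k][i] * w[k] * H[k][j] for k in range(2))
    P_new = inv2(info)
    # innovation: measured minus predicted [range, bearing], angle wrapped
    db = z[1] - (math.atan2(dy, dx) - heading)
    innov = [z[0] - r, math.atan2(math.sin(db), math.cos(db))]
    g = [sum(H[k][i] * w[k] * innov[k] for k in range(2)) for i in range(2)]
    new_pos = [pos[i] + sum(P_new[i][j] * g[j] for j in range(2)) for i in range(2)]
    return new_pos, P_new


P0 = [[25.0, 0.0], [0.0, 25.0]]   # prior: 5 m std in x and y (assumed)
# the same perfect observation of a landmark 5 m away and 50 m away
pos_near, P_near = landmark_update([0.0, 0.0], P0, (5.0, 0.0), (5.0, 0.0), 1.0, 0.1)
pos_far, P_far = landmark_update([0.0, 0.0], P0, (50.0, 0.0), (50.0, 0.0), 1.0, 0.1)
near_trace = P_near[0][0] + P_near[1][1]
far_trace = P_far[0][0] + P_far[1][1]
print(f"trace of P: near landmark {near_trace:.2f}, far landmark {far_trace:.2f}")
```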

Now let's use GPS and IMU together. Notice that the filter performs better than with either of these sensors alone.
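In the simplest scalar case, combining two independent estimates of the same position gives a variance smaller than either one. For a direct scalar measurement, the Kalman update reduces to exactly this inverse-variance weighting. The sketch below uses made-up numbers; the GPS and IMU values are assumptions.

```python
def fuse(mean_a, var_a, mean_b, var_b):
    """Inverse-variance weighting of two independent Gaussian estimates."""
    var = 1.0 / (1.0 / var_a + 1.0 / var_b)          # information adds up
    mean = var * (mean_a / var_a + mean_b / var_b)   # weighted by confidence
    return mean, var


# assumed: GPS says 100 m (sigma 10 m), IMU dead reckoning says 104 m (sigma 6 m)
mean, var = fuse(100.0, 10.0 ** 2, 104.0, 6.0 ** 2)
print(f"fused: {mean:.2f} m, sigma {var ** 0.5:.2f} m")
```

The fused sigma is about 5.1 m, better than the 6 m of the better sensor. This only holds if the two errors really are independent.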

Finally, let's use all available sensors. The result is even better.

To conclude, let me explain why I used only some of the available data; for example, why the IMU speed data was ignored. The answer is simplicity. In the next section I am going to add the missing sensor inputs, so that the code is ready for the data ROS2 can provide.

Also, it is important to understand what should and should not be used as sensor data. For example, the robot uses a diff drive controller, and since it "knows" the wheel speeds, it can publish its linear and angular velocity. Plus, since it "knows" how far the wheels have turned, it can publish its coordinates. However, this is not sensor data. To some extent it is a "control command", and we have already sent these same commands to the diff drive and informed the robot about them, so we should not use them again. The Kalman filter "does not like" correlated data: it treats every input as independent information, so using the same information twice makes it overconfident, which can create problems.
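Here is a minimal scalar sketch of why feeding the same information twice hurts. It assumes that wheel odometry has been turned into a position "measurement"; `update`, `z_odom` and the numbers are hypothetical. The second update shrinks the variance again even though nothing new was learned, so the filter becomes overconfident.

```python
def update(x, P, z, R):
    """Scalar Kalman update that treats z as new, independent information."""
    K = P / (P + R)
    return x + K * (z - x), (1.0 - K) * P


x0, P0 = 0.0, 4.0          # prior (assumed)
z_odom, R_odom = 1.0, 1.0  # hypothetical odometry-derived position and its variance

x1, P1 = update(x0, P0, z_odom, R_odom)   # used once: correct
x2, P2 = update(x1, P1, z_odom, R_odom)   # same data used again: double counting
print(f"once: var {P1:.3f}; twice: var {P2:.3f} (no new information was added)")
```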

The next big chunk of possible improvements is related to landmarks, but not in the traditional sense. Imagine that our robot drives on a road. We know it is on the road, right? At the same time, the "uncertainty spot" returned by the Kalman filter covers part of the road and some land outside it. But we KNOW we are on the road, so we can make our localization much more accurate.

You can see this trick in GPS navigators: the car icon stays on the road, even though the GPS "spot" is much wider. But using this approach with a Kalman filter is very difficult.

What exactly is the intersection of the Kalman filter's Gaussian with a road segment? For the filter to keep working, it should be another Gaussian... which it is not. What if the road bends? Even "less Gaussian"! What if there are two roads close to each other, and the "GPS spot" overlaps both of them? What if the robot (or car) passes under a bridge, so that two roads overlap at the same spot? Which one do we choose?
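If the road is straight and we only look at the across-road coordinate, a Gaussian restricted to the road is a truncated normal distribution, not a Gaussian. One common workaround, which this project does not use, is moment matching: replace the truncated distribution with a Gaussian of the same mean and variance. The sketch below assumes a road of known width and a 1D across-road estimate; `truncate_to_road` is a hypothetical helper. It breaks down in exactly the cases listed above: bends and multiple roads.

```python
import math


def _pdf(t):
    return math.exp(-0.5 * t * t) / math.sqrt(2.0 * math.pi)


def _cdf(t):
    return 0.5 * (1.0 + math.erf(t / math.sqrt(2.0)))


def truncate_to_road(mu, sigma, left, right):
    """Mean and variance of N(mu, sigma^2) restricted to [left, right]."""
    a, b = (left - mu) / sigma, (right - mu) / sigma
    Z = _cdf(b) - _cdf(a)                  # probability mass that lies on the road
    if Z <= 0.0:
        raise ValueError("the Gaussian does not overlap the road")
    ratio = (_pdf(a) - _pdf(b)) / Z
    mean = mu + sigma * ratio
    var = sigma ** 2 * (1.0 + (a * _pdf(a) - b * _pdf(b)) / Z - ratio ** 2)
    return mean, var


# assumed: an 8 m wide road centred at 0, GPS says 3 m off centre with sigma 10 m
mean, var = truncate_to_road(3.0, 10.0, -4.0, 4.0)
print(f"on-road estimate: {mean:.2f} m, sigma {var ** 0.5:.2f} m")
```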

In this case we need to keep the robot's history: if we were driving on road A, we should not assume that we suddenly jumped to road B.
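Keeping that history can be sketched as a discrete Bayes filter over road hypotheses, which is the same forward step a hidden Markov model uses. Switching roads gets a small prior probability, so an ambiguous fix does not make the robot jump. `road_belief_step` and `stay_prob` are hypothetical, and computing the per-road likelihood is left out.

```python
def road_belief_step(belief, likelihood, stay_prob=0.99):
    """One step of a discrete Bayes filter over road hypotheses.

    belief:     dict road -> probability that we are on that road
    likelihood: dict road -> p(current position fix | we are on that road)
    stay_prob:  assumed probability of staying on the same road between steps
    """
    n = len(belief)
    switch_prob = (1.0 - stay_prob) / (n - 1) if n > 1 else 0.0
    total_prior = sum(belief.values())
    # prediction: roads rarely change, so most of the belief stays where it was
    predicted = {
        road: stay_prob * p + switch_prob * (total_prior - p)
        for road, p in belief.items()
    }
    # correction: weight by how well each road explains the position fix
    posterior = {road: predicted[road] * likelihood[road] for road in belief}
    total = sum(posterior.values())
    return {road: p / total for road, p in posterior.items()}


belief = {"A": 0.95, "B": 0.05}   # we have been driving on road A
# the GPS spot overlaps both roads equally, so the fix alone cannot decide
belief = road_belief_step(belief, {"A": 0.5, "B": 0.5})
print(belief)   # road A keeps most of the probability
```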

In fact, at some points this approach can give us even more accuracy: we can assume that slowing down is related to crossing an intersection, and when we turn, we can infer both the x and y coordinates of the robot... But this requires really complex code.

I am not going to handle "road matching", at least not in the near future. Let me just mention that it can be done by running a separate piece of code for matching and using its result to adjust the robot's coordinates. So it is like an extra sensor, but if you want to use it with a Kalman filter, you should always keep in mind its non-Kalman properties.
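Used as an "extra sensor", the simplest (and most naive) version treats "we are on the road" as a 1D measurement: the signed distance from the road's centre line is 0, with some standard deviation. The sketch below assumes that a separate matcher has already picked a single straight segment `a`-`b`. It also pretends that the road constraint is Gaussian, which is exactly the non-Kalman property to keep in mind. `road_pseudo_update` and `road_sigma` are hypothetical names.

```python
import math


def road_pseudo_update(pos, P, a, b, road_sigma):
    """Kalman update using 'we are on the road a-b' as a 1D measurement.

    The measurement is the signed distance from the road's centre line,
    claimed to be 0 with standard deviation road_sigma. Only the across-road
    direction is corrected; along the road nothing changes.
    """
    dx, dy = b[0] - a[0], b[1] - a[1]
    length = math.hypot(dx, dy)
    n = (-dy / length, dx / length)                       # unit normal to the road
    d = n[0] * (pos[0] - a[0]) + n[1] * (pos[1] - a[1])   # signed offset
    Pn = (P[0][0] * n[0] + P[0][1] * n[1],
          P[1][0] * n[0] + P[1][1] * n[1])                # P n
    S = n[0] * Pn[0] + n[1] * Pn[1] + road_sigma ** 2     # innovation variance
    K = (Pn[0] / S, Pn[1] / S)                            # Kalman gain
    innov = 0.0 - d
    new_pos = (pos[0] + K[0] * innov, pos[1] + K[1] * innov)
    # P_new = P - K (P n)^T, valid because P is symmetric
    new_P = [[P[i][j] - K[i] * Pn[j] for j in range(2)] for i in range(2)]
    return new_pos, new_P


# assumed: a road along the x axis, estimate 6 m off the road, 10 m std in x and y
new_pos, new_P = road_pseudo_update((50.0, 6.0), [[100.0, 0.0], [0.0, 100.0]],
                                    (0.0, 0.0), (100.0, 0.0), road_sigma=2.0)
print(new_pos, new_P)
```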

In the next section I will handle the data I didn't use here. Then I am going to move it all into the 3D world, since right now we do not use the z coordinate, pitch, or tilt. After that, I will add it all to a ROS2 package.