Sensors in Gazebo

The code for this section is available as the navigation_bot_02 package.

Let's add some sensors to the robot we created in a previous section. Specifically, we will add a lidar sensor and a depth camera (note: we are still following the official "sam_bot" tutorial, but step by step).

lidar.xacro

In the code snippet above, we created a lidar_link, which is then referenced by the gazebo_ros_ray_sensor plugin. We also set values for the simulated lidar's scan and range properties. Finally, we set /scan as the topic on which the sensor publishes sensor_msgs/LaserScan messages.
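To make the scan and range properties more concrete, here is a minimal Python sketch of how a consumer of the /scan topic could turn LaserScan ranges into 2D points. It does not use ROS: `ScanConfig` and `scan_to_points` are hypothetical helpers, and the evenly spaced ray layout (increment = (max_angle - min_angle) / (samples - 1)) is an assumption about how the simulated lidar spreads its rays. Ray i points at min_angle + i * increment, and readings outside [range_min, range_max] are discarded, as the sensor_msgs/LaserScan message definition recommends.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ScanConfig:
    """Hypothetical mirror of the <scan> and <range> values set in lidar.xacro."""
    samples: int      # number of rays per sweep
    min_angle: float  # radians
    max_angle: float  # radians
    range_min: float  # metres
    range_max: float  # metres

    @property
    def angle_increment(self) -> float:
        # Assumption: rays are spread evenly from min_angle to max_angle
        # inclusive, so there are (samples - 1) gaps between them.
        if self.samples < 2:
            return 0.0
        return (self.max_angle - self.min_angle) / (self.samples - 1)


def scan_to_points(cfg: ScanConfig, ranges: List[float]) -> List[Tuple[float, float]]:
    """Convert LaserScan-style ranges into (x, y) points in the lidar frame."""
    points = []
    for i, r in enumerate(ranges):
        # NaN fails both comparisons and inf exceeds range_max, so both are skipped.
        if not (cfg.range_min <= r <= cfg.range_max):
            continue
        angle = cfg.min_angle + i * cfg.angle_increment
        points.append((r * math.cos(angle), r * math.sin(angle)))
    return points
```

For example, with 4 rays covering 0 to 3π/2 the increment is π/2, so readings of 1.0 m and 2.0 m on the first two rays map to roughly (1, 0) and (0, 2), while an inf reading and a 0.05 m reading (below an assumed range_min of 0.12 m) are dropped.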

depth_camera.xacro

Next, let us add a depth camera. We are going to create a depth_camera.xacro file:

robot.urdf.xacro

The two sensors defined above have to be included in the "main" URDF file of our project (robot.urdf.xacro):
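Before launching, it can help to confirm that the includes really pulled both sensors into the robot description. One possible check (a sketch, not part of the project) is to expand the file with the xacro tool, for example `ros2 run xacro xacro robot.urdf.xacro > robot.urdf`, and inspect the result with Python's standard `xml.etree.ElementTree`. The helper `summarize_sensors` is hypothetical, and the URDF string below is a trimmed stand-in: `camera_link` and the `depth_camera` sensor name are illustrative and may not match the names actually used in depth_camera.xacro.

```python
import xml.etree.ElementTree as ET
from typing import Dict, List


def summarize_sensors(urdf_xml: str) -> Dict[str, List[str]]:
    """List link names and Gazebo <sensor> entries found in an expanded URDF string."""
    root = ET.fromstring(urdf_xml)
    # <link> elements are direct children of <robot>.
    links = [link.get("name") for link in root.findall("link")]
    sensors = []
    # Gazebo sensors are declared inside <gazebo reference="..."> blocks.
    for gz in root.findall("gazebo"):
        for sensor in gz.findall("sensor"):
            sensors.append(
                f'{sensor.get("name")}:{sensor.get("type")} on {gz.get("reference")}'
            )
    return {"links": links, "sensors": sensors}


# Hypothetical, heavily trimmed expanded URDF (names are illustrative only).
EXAMPLE_URDF = """<robot name="example_bot">
  <link name="base_link"/>
  <link name="lidar_link"/>
  <link name="camera_link"/>
  <gazebo reference="lidar_link">
    <sensor name="lidar" type="ray"/>
  </gazebo>
  <gazebo reference="camera_link">
    <sensor name="depth_camera" type="depth"/>
  </gazebo>
</robot>
"""

if __name__ == "__main__":
    print(summarize_sensors(EXAMPLE_URDF))
```

In practice you would read the generated robot.urdf instead of the inline string; if a sensor link or its `<gazebo>` block is missing from the summary, the corresponding include is the first thing to check.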

Build, Run and Verification

# In the first terminal:

$ ros2 run teleop_twist_keyboard teleop_twist_keyboard

# In a second terminal:

$ cd ~/SnowCron/ros_projects/harsh

$ colcon build --packages-select navigation_bot_02

$ source install/setup.bash

$ ros2 launch navigation_bot_02 launch_sim.launch world:=src/worlds/maze.sdf

Now we can move the robot around using keyboard commands in the teleop terminal.

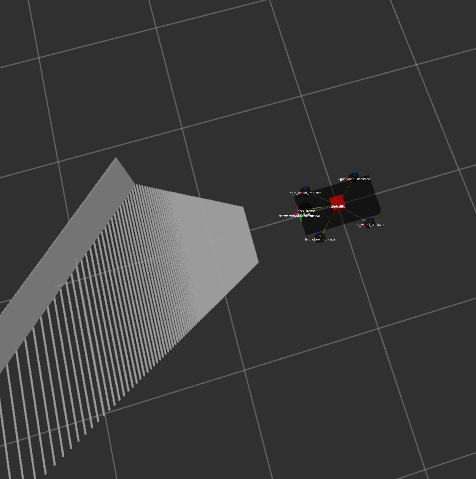

In the RViz window, we can verify that our sensors are modeled properly. To visualize the sensor_msgs/PointCloud2 data, click the Add button at the bottom, open the By topic tab, and select the sensor_msgs/PointCloud2 entry:

To be clear, the picture shows the point cloud of the wall that the depth camera is pointing at.
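The point cloud is derived from the depth image: each pixel's depth is back-projected through the camera model into a 3D point. Gazebo's depth camera plugin does this internally; the Python sketch below only illustrates the standard pinhole back-projection under simplifying assumptions (ideal pinhole, square pixels, principal point at the image centre, focal length derived from the horizontal field of view, depth measured along the optical axis). `depth_to_points` is a hypothetical helper, not the plugin's code. For a flat wall facing the camera, every valid pixel has the same depth, so the result is a regular grid of points lying in one plane, which matches the flat patch of points in the picture.

```python
import math
from typing import List, Tuple


def depth_to_points(depth: List[List[float]], hfov: float) -> List[Tuple[float, float, float]]:
    """Back-project a depth image into 3D points with an ideal pinhole model.

    depth[v][u] is the distance along the optical (z) axis in metres and hfov is
    the horizontal field of view in radians. Points use the optical-frame
    convention: x right, y down, z forward. Invalid pixels (<= 0, inf, NaN) are skipped.
    """
    height = len(depth)
    width = len(depth[0]) if height else 0
    if width == 0:
        return []
    fx = (width / 2.0) / math.tan(hfov / 2.0)  # focal length in pixels
    fy = fx                                   # square pixels assumed
    cx = (width - 1) / 2.0                    # principal point at image centre
    cy = (height - 1) / 2.0
    points = []
    for v, row in enumerate(depth):
        for u, z in enumerate(row):
            if not (z > 0.0 and math.isfinite(z)):
                continue
            x = (u - cx) * z / fx
            y = (v - cy) * z / fy
            points.append((x, y, z))
    return points
```

With a 3x3 image of constant 2.0 m depth and a 90 degree field of view, the centre pixel maps to (0, 0, 2) and its right-hand neighbour to about (1.33, 0, 2): all nine points share z = 2, i.e. they form a plane like the wall in RViz.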